52 weeks of BetterEvaluation: Week 37: Collaborative Outcomes Reporting

Collaborative Outcomes Reporting (COR) is an approach to impact evaluation that combines elements of several rigorous non-experimental methods and strategies.

You’ll find it on the Approaches page of the BetterEvaluation site; an approach combines several methods to address a number of evaluation tasks. This week we talk to Jess Dart, who developed COR. Jess is the new steward for BetterEvaluation’s COR page and, together with Megan Roberts from Clear Horizon, has provided a step-by-step guide, advice on choosing and using the approach well, and examples of its use.

Q. What are the essential features of COR?

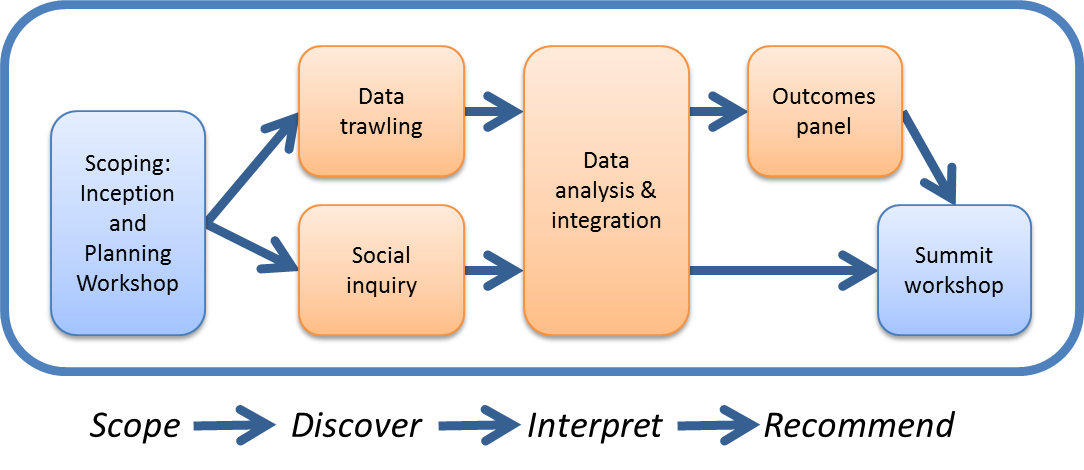

COR starts by developing a theory of change for the program or policy. It makes maximum use of existing data (a "data trawl") before focusing on additional data collection to fill gaps. It uses the rigorous non-experimental techniques of contribution analysis and multiple lines and levels of evidence to make causal inferences without a counterfactual. It uses both an expert panel ("outcomes panel") and a stakeholder summit workshop to review and synthesise data into an overall evaluative judgement. And it produces reports that are brief but linked to detailed evidence.

Q. What were the particular situations that COR was developed to address?

Collaborative Outcomes Reporting was developed to address some particular but very common situations:

- There is a need to tell a clear evidence-based story about the impact of a program where there was no comprehensive monitoring system in place at the start of the program

- It is not possible or logistically feasible to create a credible comparison group to provide a counterfactual

- It is difficult to synthesise diverse evidence into an overall evaluative judgement, and there is uncertainty about what data is available

- It is a complex program with a number of different outcomes that we are interested in finding out about

- We want to gain knowledge about what to do for the next phase of the program and do this in a way that engages the participants and encourages ownership

Q. What sort of programs are likely to be suitable for using COR?

COR is good for a variety of types of program. So far it has been used in agricultural extension, natural resource management, overseas development and community development programs, and to evaluate the impact of Indigenous education programs. It is particularly good for programs that have a number of different stakeholders who are willing to engage in some collaborative inquiry. It works well for programs associated with behavioural or attitudinal change. It may not be an appropriate choice for a highly political program or one with very sensitive issues, as there is a lot of open discussion in COR. It is also important that it is a singular “program” or “project”, and that all of its activities contribute to the same set of outcomes.

Q. How long does it usually take to do an impact evaluation using COR?

A typical timeframe so far has been about 3 to 4 months. More time would result in a more relaxed process! In an overseas development context the timing is a little different, and depends on who is conducting the evaluation and whether consultant teams need to fly in and out. Where we have facilitated COR in an overseas setting we have needed two separate missions: one for the planning phase, and a return trip to conduct the expert panel and summit workshop. This scenario assumes local researchers carry out the data collection in the interval between the two missions.

Q. What sorts of expertise does an evaluator need to be able to use COR?

In addition to the usual skills of interviewing, data analysis and report writing, evaluators using COR need good facilitation skills. There are up to three facilitated workshops, each of which can be tricky in different ways. The planning workshop requires facilitation skills to generate an agreed retrospective program theory model; the expert panel needs very tight facilitation and an ability to mediate between different experts. The summit workshop can be a large group, so it requires large-group facilitation skills and an understanding of how to set up a good facilitation process.

Q. How do you decide who should be included in the expert panel and the summit workshop?

The expert panel is where we make definitive judgements about impact and the contribution of the program. Here it is important to find 3 to 6 people who have intimate knowledge of the relevant topic and are familiar with the type of data we will be presenting. This needs to be balanced with their being independent and having no conflict of interest. So they are not program staff or people who were involved in the program; instead they are often from a local university or peak body. In some programs there may be no obvious ‘scholarly’ experts, and it may be more appropriate to invite local leaders or Indigenous elders. We have occasionally substituted a citizen jury for the expert panel.

The summit workshop is where we invite a broader range of stakeholders to make sense of the data and develop a set of recommendations. It is important to think carefully about who should be invited. Often we invite the people who were interviewed during the evaluation, the program staff, the funders or donors, and anyone else who has a stake in the program. The summit workshop is designed to help people understand each other’s views and agree on a way forward, so it is OK to have people with different views and levels of involvement. It is a great way to encourage ownership of the recommendations and can lead to good utilisation of findings and uptake of recommendations. A typical size is anywhere between 20 and 200 participants.

Q. What do you do if the participants at the expert panel can't agree on the overall evaluative judgement?

In our experience of running over 20 expert panels, this scenario is rare; in fact, it has happened only once so far. Careful facilitation and a focus on evidence usually enable the facilitator to bring the panel to consensus. On the occasion where it did not work, there were 10 participants on the expert panel (too many), the topic was politically sensitive (not a good choice for COR), the experts strongly disagreed about the value of the program, and it was questionable whether they had a conflict of interest. In that case, the different perspectives were recorded and presented in the report, and it was not possible to provide a unanimous conclusion.

Q. Do you have to do all the steps in COR – what if we can’t get people together for the workshops?

The COR approach is very flexible; we have modified it in various ways to suit the particular context. For example, where we were unable to bring the experts together, we have substituted interviews with key experts for the expert panel.
