52 weeks of BetterEvaluation: Week 44: How can monitoring data support impact evaluations?

Maren Duvendack and Tiina Pasanen explore the issue of using monitoring data in impact evaluations.

Maren and Tiina work on the Methods Lab, a programme aiming to develop and test flexible and affordable approaches to impact evaluation. In this blog they discuss some problems with using monitoring data for impact evaluation, and suggest some solutions.

Impact evaluations (IEs) are very expensive, right? There seems to be a common understanding that rigorous and well-designed IEs (not only Randomised Controlled Trials but other IE approaches as well) are anything but cheap. This has led a number of donors and programmes to look for alternative, more flexible and cost-effective ways to evaluate the impact of a development programme or policy and to provide evidence on the causal link between a programme and its outcomes. For example, Australian Aid is funding a Methods Lab, conducted by the Overseas Development Institute in partnership with BetterEvaluation, to trial such methods for impact evaluation.

One frequently suggested strategy for reducing the cost of impact evaluations is to use existing monitoring data, which are often the primary focus of an existing M&E plan. In many ways this makes perfect sense: almost all programmes collect monitoring data (some collect mountains of them), so why not use them instead of gathering a new set of data?

Unfortunately, three main problems exist.

1. The quality of existing data is often not good enough for an IE – collecting high-quality data systematically is harder than one might think!

2. Monitoring data does not always provide information about impacts, and sometimes not even about intermediate outcomes. Instead, the focus is often on inputs, activities and outputs, which provide little evidence about the intermediate outcomes that are understood to lead to the intended impacts.

3. Monitoring data usually address a different set of questions from those asked in impact evaluations. They usually focus on describing how things have changed without answering questions about the causal contribution of the programme or project.

So, for example, a vaccination programme might monitor the number of vaccinated children (outcome), while an IE would likely focus on child mortality rates in the region and changes in beneficiaries’ lives. Or a cash transfer programme might only monitor children’s attendance rates in school, but an IE would need evidence on improvements in learning. Increased attendance is a good outcome, but it’s not yet an impact.

So, how can we improve the usefulness of monitoring data for IE?

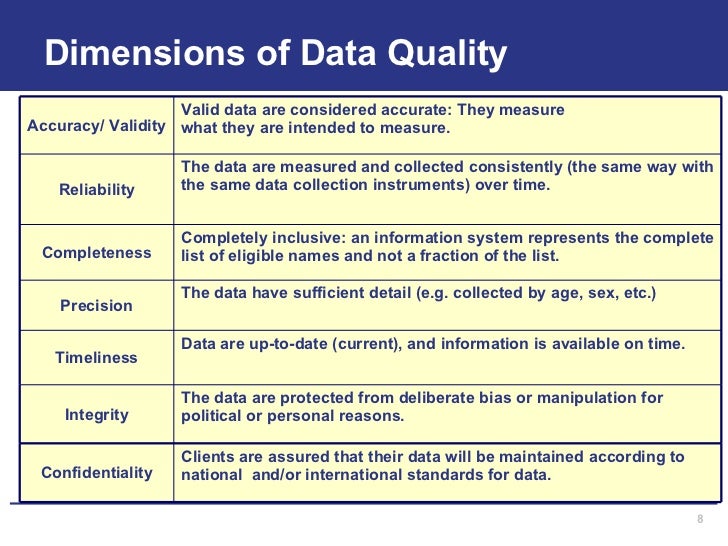

Here are three strategies, one for each of the three problems above. To tackle the data quality problem, one strategy is to address quality requirements explicitly at the design stage, including the requirements of a potential IE. A number of dimensions (such as accuracy and reliability) should be considered; this table from MEASURE Evaluation provides a useful categorisation. Without high-quality data, any subsequent analysis is likely to produce biased and misleading results.

For the information needs problem, a strategy is to link M&E indicators to the programme's theory of change (ToC). Examine how well the current M&E questions and data link up to the ToC: are the existing indicators appropriate for measuring its key dimensions? If you cannot map the M&E indicators across the various elements of the ToC, then they are not set up to answer questions about impact.
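As an illustration only, the mapping exercise can be sketched in a few lines of Python. This is a minimal sketch, not a Methods Lab tool: the five-level results chain (inputs, activities, outputs, intermediate outcomes, impact), the `Indicator` type and both helper functions are hypothetical, and the ToC elements are loosely based on the cash transfer example above.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical results-chain levels, ordered from implementation towards impact.
TOC_LEVELS = ["input", "activity", "output", "intermediate_outcome", "impact"]


@dataclass(frozen=True)
class Indicator:
    name: str
    toc_element: str  # the ToC element this indicator is intended to measure


def unmapped_elements(toc: dict[str, str], indicators: list[Indicator]) -> list[str]:
    """Return ToC elements, in results-chain order, that no indicator measures."""
    covered = {ind.toc_element for ind in indicators}
    ordered = sorted(toc, key=lambda element: TOC_LEVELS.index(toc[element]))
    return [element for element in ordered if element not in covered]


def coverage_by_level(toc: dict[str, str], indicators: list[Indicator]) -> dict[str, int]:
    """Count how many indicators map onto each ToC level."""
    counts = {level: 0 for level in TOC_LEVELS}
    for ind in indicators:
        if ind.toc_element in toc:  # indicators that map to no ToC element are ignored
            counts[toc[ind.toc_element]] += 1
    return counts


# Illustrative cash transfer programme (element and indicator names are made up).
toc = {
    "cash transferred to households": "output",
    "children attend school": "intermediate_outcome",
    "children's learning improves": "impact",
}
indicators = [
    Indicator("number of transfers disbursed", "cash transferred to households"),
    Indicator("school attendance rate", "children attend school"),
]

print(unmapped_elements(toc, indicators))  # ["children's learning improves"]
print(coverage_by_level(toc, indicators))
```

Here the attendance indicator maps onto an intermediate outcome, but nothing measures learning, so the check flags the impact level as uncovered. This echoes the point that increased attendance is a good outcome but not yet an impact.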

Finally, for the causal inference problem, a strategy is to plan an ‘impact-oriented M&E framework’ from the start. Producing quality data that links to the ToC is good practice, but it may not be enough; sometimes the IE questions and requirements have to be considered from the beginning to produce a rich seam of data running through the entire project. In the long run this can reduce the cost of the IE through the ongoing collection of impact indicators, linked to intermediate outcomes and to the quantity and quality of implementation. Where expected impacts are not occurring, monitoring data linked in this way can distinguish failures in implementation from failures in theory, which will be critically important for an impact evaluation.
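To make the distinction between implementation failure and theory failure more concrete, here is a rough Python sketch of how linked monitoring data might be triaged when expected impacts are not occurring. The `MonitoringSnapshot` fields, the `diagnose` function and the 0.8 thresholds are all hypothetical. Because the sketch only compares indicators with targets, it suggests where to look; it does not establish the programme's causal contribution, which still calls for an impact evaluation design.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonitoringSnapshot:
    """Hypothetical summary of linked monitoring data for one reporting period."""
    delivery_rate: float      # share of planned activities actually delivered (quantity)
    quality_rate: float       # share of delivery meeting agreed quality standards
    outcome_target_met: bool  # intermediate outcome indicators reached their targets
    impact_target_met: bool   # impact indicators reached their targets


def diagnose(snap: MonitoringSnapshot, min_delivery: float = 0.8, min_quality: float = 0.8) -> str:
    """Descriptive triage of why expected impacts may not be occurring.

    Not causal inference: thresholds are illustrative assumptions.
    """
    if snap.impact_target_met:
        return "impact targets met"
    # Weak quantity or quality of implementation points to implementation failure.
    if snap.delivery_rate < min_delivery or snap.quality_rate < min_quality:
        return "possible implementation failure"
    # Implementation looks adequate, so the weak link is in the theory of change.
    if not snap.outcome_target_met:
        return "possible theory failure: activities not leading to intermediate outcomes"
    return "possible theory failure: intermediate outcomes not leading to impacts"


print(diagnose(MonitoringSnapshot(0.55, 0.90, False, False)))  # possible implementation failure
print(diagnose(MonitoringSnapshot(0.95, 0.90, True, False)))
```

The ordering of the checks is a design choice: implementation is examined first because, if the programme was not delivered as planned, a missing impact says little about whether the theory of change holds.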

There are very few examples of the last of these strategies in practice, but the Methods Lab, mentioned above, is starting to work with a number of programmes to support the design of impact-oriented M&E frameworks. We don't have all the answers yet, but we will share what we learn through BetterEvaluation.

We are convinced, however, that M&E frameworks that truly consider both Monitoring AND Evaluation, and anticipate the needs of impact evaluations, can improve the quality and reduce the cost of subsequent impact evaluations.

Resources

Introduction to impact evaluation: Patricia Rogers identifies the different types of questions that an impact evaluation needs to ask.

Linking monitoring and evaluation to impact evaluation: Burt Perrin discusses ways of designing and using monitoring information to improve the quality of impact evaluation.

Synthesise data across evaluations: These methods answer questions about a type of intervention rather than about a single case – questions such as “Do these types of interventions work?” or “For whom, in what ways and under what circumstances do they work?”

Photo: Workshop in Cambodia, Arnaldo Pellini/Flickr
