Impact evaluation: challenges to address

In development, government and philanthropy, there is increasing recognition of the potential value of impact evaluation.

There is dedicated funding available and specific initiatives to develop capacity for both commissioning and conducting impact evaluation, including supporting use of the findings.

But some important technical and organisational challenges remain. This is why, in the coming year, impact evaluation is one of the areas the BetterEvaluation team will particularly focus on in its Research & Development and Evaluation Capacity Development work.

Do you have ideas on how to respond to the three challenges below? Or other challenges that should be added?

1. Ensuring a comprehensive definition of ‘impact’

The OECD-DAC definition of impact is deliberately comprehensive: ‘positive and negative, primary and secondary long-term effects produced by a development intervention, directly or indirectly, intended or unintended’.

This definition is widely accepted and quoted by development agencies and government departments, but current evaluation practice often falls short, instead looking only at relatively short-term, intended direct effects.

Better impact evaluation will pay serious attention to methods and processes for:

- Anticipating possible unintended impacts, using methods such as negative programme theory

- Identifying additional unintended impacts that were not anticipated, using processes such as reflective practice, open-ended participant feedback and observation

- Clarifying values of key stakeholders in terms of what are seen as positive and negative impacts

- Getting evidence of unintended impacts

- Tracking results over longer time periods, using both evaluations with longer timeframes and large-scale longitudinal studies which can be used for many evaluations

We need to learn more about the various methods and processes available to do these – and what it takes to implement them well and gain support for using them.

2. Ensuring a comprehensive definition of ‘impact evaluation’

An impact evaluation can be broadly defined as an evaluation which evaluates impacts. One of its essential elements is that it not only measures or describes changes that have occurred but also seeks to understand the role of particular interventions in producing these changes.

The OECD-DAC has a broad definition of causal attribution (sometimes referred to as causal contribution or causal inference):

“Ascription of a causal link between observed (or expected to be observed) changes and a specific intervention.”

The BetterEvaluation team likes this definition because it does not require that changes are produced solely or wholly by the intervention under investigation. It takes into consideration that other causes may also have been involved – for example, other programmes or policies in the area of interest, or certain contextual factors. In practice, this is usually the case.

The OECD-DAC definition can also encompass a broad range of methods for causal attribution. The working paper by Elliot Stern and his team for DfID on “Broadening the range of designs and methods for impact evaluation” shows how there are other strategies for causal attribution that can be used, not only counterfactual approaches.

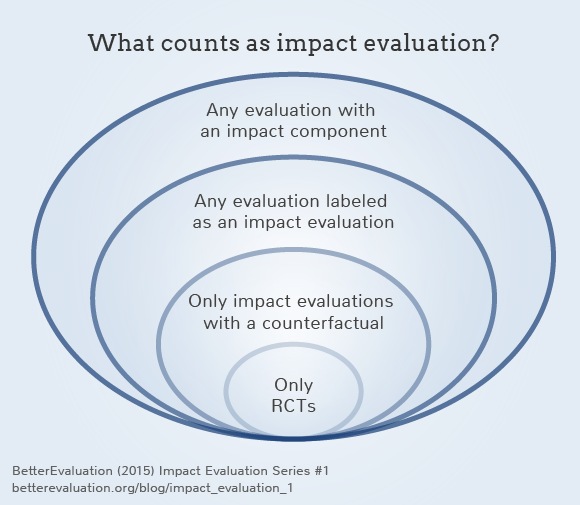

The broadest view of impact evaluation is any evaluation which evaluates impact – even if it is not labelled as an impact evaluation and has elements of other types of evaluation as well, such as needs assessment and process evaluation. This type of impact evaluation is often found in government, where a comprehensive evaluation is undertaken, especially of new policy initiatives. A slightly narrower definition covers any evaluation which is primarily focused on evaluating impact and which is labelled as an impact evaluation. Narrower still is to include only evaluations which have used counterfactual approaches to causal attribution, or only randomised controlled trials (RCTs).

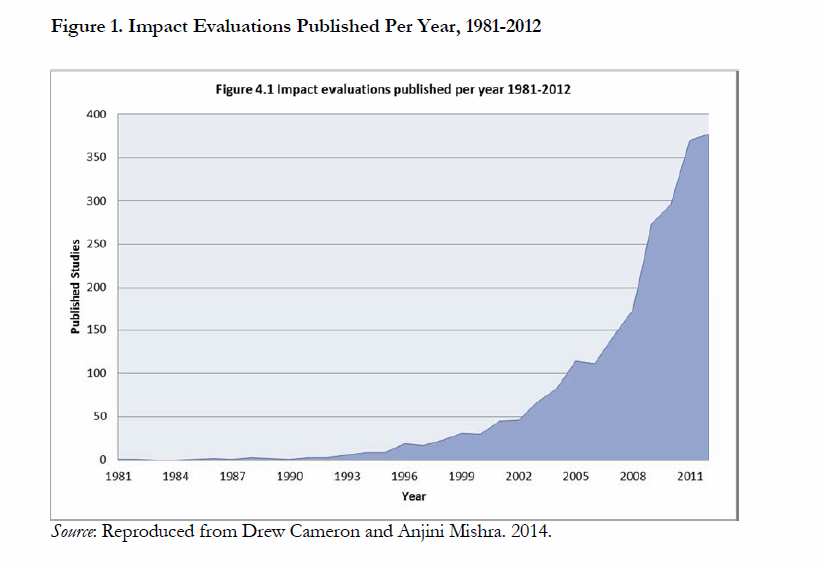

Given these different possible definitions, it is interesting to consider the following graph by Cameron and Mishra (reproduced in the recent policy paper on impact evaluation by Ruth Levine and William Savedoff), which shows a rapid rise in impact evaluations being conducted – but does not explain which definition is being used.

Impact evaluations are also often narrowed to focus on the average effect (asking “What works?”) rather than paying attention to variations in impact (asking “What works for whom in what ways and under what circumstances?”). This can have serious consequences in terms of equity – a programme which is effective on average might increase equity gaps if it is less effective for the most disadvantaged. And an impact evaluation which fails to address equity issues might inadvertently encourage programmes and policies which make inequities worse.
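A small worked example may make this concrete. The sketch below uses entirely invented outcome scores for two hypothetical groups (the group names, scores and group sizes are assumptions for illustration, not data from any evaluation). It shows how a positive average effect can sit alongside a widening gap between groups.

```python
from statistics import mean

# Invented outcome scores (illustration only), with and without a
# hypothetical programme, for two equally sized groups.
outcomes = {
    "most disadvantaged": {"without": [40, 42, 38, 40], "with": [41, 43, 39, 41]},
    "less disadvantaged": {"without": [60, 62, 58, 60], "with": [70, 72, 68, 70]},
}

def effect(group):
    """Difference in mean outcome with and without the programme."""
    return mean(group["with"]) - mean(group["without"])

# "What works for whom?": the effect within each group.
effects = {name: effect(scores) for name, scores in outcomes.items()}

# "What works?": the average effect (groups are the same size here,
# so a simple mean of the group effects is enough).
average_effect = mean(list(effects.values()))

# Equity gap: difference in mean outcome between the two groups.
def gap(condition):
    return (mean(outcomes["less disadvantaged"][condition])
            - mean(outcomes["most disadvantaged"][condition]))

gap_without = gap("without")
gap_with = gap("with")

print("Effect by group:", effects)
print("Average effect:", average_effect)
print("Gap without programme:", gap_without)
print("Gap with programme:", gap_with)
```

In this made-up case the programme “works” on average, yet almost all of the gain goes to the less disadvantaged group, so the gap between the groups grows. An evaluation reporting only the average would miss this.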

Asking simply “what works” without paying careful attention, conceptually and empirically, to the influence of context, also makes it harder to apply findings to new settings and times.

Better impact evaluation will use methods, processes and designs which can:

- Develop logic models and theories of change which recognise the various contributions to impacts

- Undertake causal attribution in ways which are appropriate for the situation

- Go beyond the average effect – using approaches such as realist evaluation

We need to identify existing examples of impact evaluations which have addressed these issues – and encourage more to do so.

3. Ensuring attention to supporting different types of use of impact evaluation

Impact evaluations have been shown to be useful in informing decisions about whether to continue a programme or policy, and choices about which policy or programme should be funded. They have been used to guide the design of programmes and policies and can even improve implementation by identifying critical elements to monitor and tightly manage.

But in many cases there is insufficient attention paid to clarifying the intended uses of impact evaluation and supporting this. In particular, it is often not clear how the findings from an impact evaluation in one setting should be used in other settings (external validity, or going to scale).

Better impact evaluation will use methods, processes and designs which can:

- Support translation of findings to new settings – not just transfer

- Build in learning from implementation and scaling up – in the language of science, knowledge goes from bench to bedside and vice versa.

We’ll be exploring these challenges in forthcoming blogs:

- Our guest blogger Michael Quinn Patton ruminates on the notion of “best practices” – and suggests avoiding the term altogether

- Greet Peersman and Patricia Rogers discuss a research agenda for impact evaluation, following up a discussion at a recent conference on rethinking impact evaluation at the Institute of Development Studies

- Along with Nikola Balvin from the UNICEF Office of Research, we introduce new resources on impact evaluations, including 13 methods briefs and 4 animated videos

While we are featuring impact evaluation in the month of January, we will be revisiting this type of evaluation throughout the year, reporting back or inviting input on specific impact evaluation-related projects.

In 2015, we’re presenting "12 months of BetterEvaluation" - with blog posts focusing each month on a different issue. This is the first in a series on impact evaluation, our focus for January.
