How evaluation embraces and enriches adaptation: A UFE approach

In this guest blog, Sonal Zaveri (with input from the DECI team) discusses why a Utilization-Focused Evaluation (UFE) approach is a natural fit for adaptive management, supporting reflection and course correction.

Many projects face uncertainty and changes in implementation, even when they are carefully planned. This can be because they are seeking solutions to complex problems. It can be because the environment in which they operate is ever changing, sometimes in unpredictable ways. Or it may be because projects and programs have not identified and questioned the assumptions underlying their implicit or explicit theories of change. In such cases, it’s essential that, as we implement, we are agile in making any changes needed during the course of the project – e.g. through the use of adaptive management (see previous blogs by Patricia Rogers, Arnaldo Pellini and Fred Carden).

In the context of a wider research project called DECI (Developing Evaluation and Communication Capacity in Information Society Research), we road-tested the UFE approach to learn how it could be used for the evaluation of a pilot of a mobile app.

Using UFE for adaptive management

The UFE approach provides a way in to enable evaluative thinking because it forces reflection on the why, what and how of evaluation. The UFE process embraces both evaluative thinking and stakeholder engagement – it encourages stakeholders who are directly invested in the project (not necessarily funders) to think about their work and what they want to achieve from it.

Evaluative thinking and stakeholder engagement do not just happen. They need facilitation, and for this we provided mentoring to guide the process. We found some ‘triggers’ very useful to start this reflective process:

- asking what are the two or three key questions that the evaluation could and should focus on

- making decisions on who would be most interested in these questions (or vice versa – asking potential users of the evaluation what they are interested in knowing)

- negotiating with these identified users how they plan to use and communicate the findings.

These three areas of decision making were not linear but iterative, and using them naturally led to an exploration of the theory of change: was it changing, had it changed, or did it need to be changed?

Applying UFE in practice

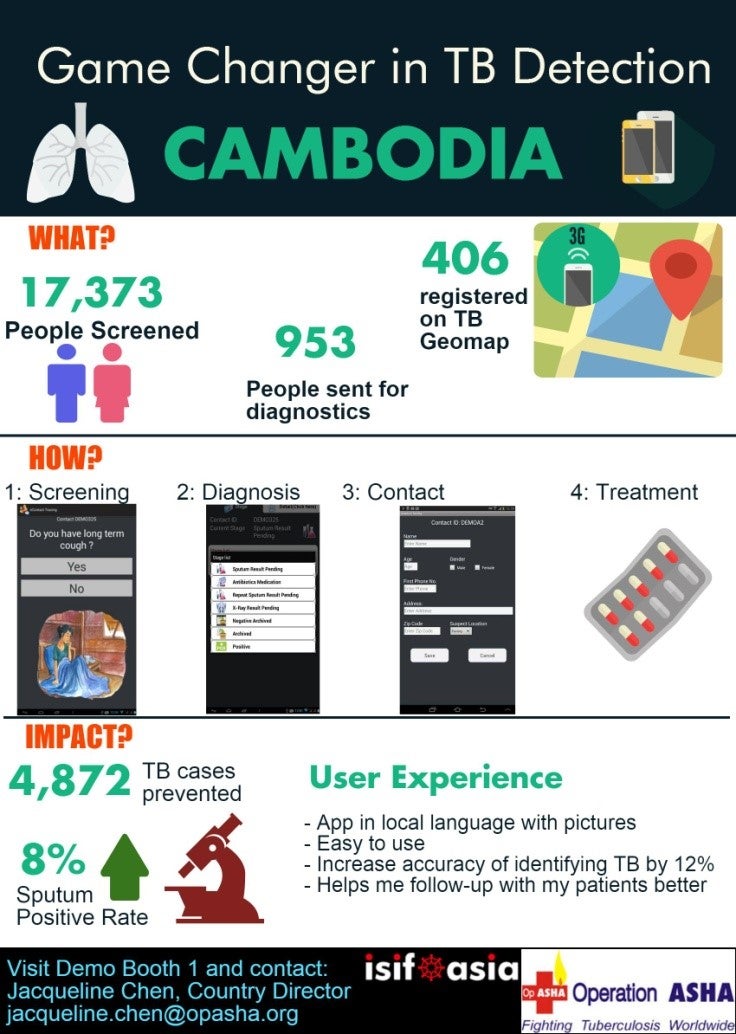

In the evaluation of Operation ASHA’s pilot of a mobile-based app for tuberculosis detection, adopting a UFE approach allowed OpASHA to reflect on what they wanted to evaluate, who would use it and for what purpose.

The initial aim of the evaluation was to assess whether the use of technology had an impact on TB detection in the areas of intervention, and then to use these findings to advocate to the Cambodian Government for expanded use of ICT for TB detection and treatment. Using the UFE approach resulted in a wider line of questioning.

At the start of the evaluation, the evaluator facilitated an internal reflection process on the overall objective of OpASHA, including management and donor expectations. The evaluator also encouraged discussions with the government regarding their processes for adopting innovative tools, including constraints, availability of current methods and requests for competing methods and tools.

As part of this, the assumptions underlying the theory of change were questioned. For example, had the app, though showing promising initial results, addressed barriers that might prevent an efficient scale-up in the future, such as the community’s acceptance of mobile data entries, field supervisors’ ability to use the app, or their apprehension regarding real-time tracking of their level of effort in communities? OpASHA changed its key evaluation questions to:

- How has the App contributed to TB care in terms of screening and case notification compared to conventional (paper-based) methods?

- What are the challenges and benefits for Field Supervisors in using the App for TB care and service delivery?

- What are the lessons learnt for scaling up?

From these discussions, it became clear that OpASHA’s management needed to use the findings from these questions to review their strategies before advocating to the government.

Learning and adapting: Single and double loop

What emerged from the UFE process in terms of single loop learning (or, lessons for course correction) was that OpASHA needed to understand the human interface of the app better. They had assumed that the real-time data regarding coverage and comparison with conventional data collection (using paper forms) would be adequate evidence to advocate with the government. However, initial results showing variations in the reporting of TB cases across districts indicated that the app was not being used consistently or widely.

This led to a realisation that the app’s interface might need to be revisited to address this, with a particular focus on how it was being used by Field Supervisors. Additionally, OpASHA needed to streamline and bring in the apps that they had developed with other funders, so as to integrate detection with treatment and follow-up. This single loop learning enabled a targeted approach to the expansion and quality control of their project, including refining the app using international protocols to address data gaps.

The UFE process also provided double loop learning, questioning the goals and theory of change of the project. During the discussions with the intended users of the evaluation (and stakeholders in the government), it became evident that advocacy with the government was not working with their current strategy. OpASHA needed to go beyond the pilot districts to a larger coverage for the government to even consider the possibility of using mobile apps. Costing of the tablets, training of field staff and added value needed to be factored in as well. Further, they needed to engage with the Technical Working Groups within the health department and consult with the WHO TB officer to communicate their tools and added advantage.

The learning led to several changes in OpASHA’s Theory of Change that were operationalized before the project was completed. These included: strengthening the capacity of field staff; sensitizing the community to mobile applications; synergizing the detection app with other related TB treatment apps to provide a ‘beginning-to-end’ detection, treatment and follow-up app; geographical expansion of the pilot to assess scale-up; and, for the first time, developing a communication strategy to influence decision makers. That strategy addressed what the purposes of communication were, who the potential users were, and what information they needed and how.

By adapting the project in line with the lessons that emerged during the UFE process, OpASHA was able to course correct and finish the pilot in a much better position to advocate to the Government.

Lessons for using evaluation to support adaptive management

One of the key lessons we found in the DECI project was that program staff needed a guided process to understand, reflect on and adapt to changing circumstances. As such, our role as evaluators evolved to include facilitation, mentoring and coaching project staff to make sense of their work. The UFE included a meta-evaluation step in which the organization reviewed the evaluation journey, and a reflection process that allowed them to appreciate the course correction and the new capabilities that they had acquired – both of which helped to consolidate the evaluative thinking process.

What are your own experiences?

Although this blog discusses the use of a utilization-focused approach, we would suggest that any learning-oriented evaluation approach, such as outcome mapping or developmental evaluation, would provide the processes to enable projects to adapt and respond to changing environments.

We would be very interested in learning from others who have used such learning-reflective approaches.

We look forward to hearing from you!
